Anthropic Brings Automatic Memory to Claude Pro and Max Users

Anthropic today said it is updating the Claude chatbot with a new memory feature, which will put Claude on par with ChatGPT. With memory enabled, Claude will be able to recall past conversations.

Anthropic first added memory to Claude earlier this year, but in the initial implementation, Claude recalled details only when specifically asked. In August, Anthropic expanded the feature so that Claude could automatically remember conversation details without a specific user request, but that functionality was limited to Team and Enterprise subscribers.

Claude's memory functionality is now expanding to all paid users, including Pro and Max subscribers. Max users can turn it on now, while Pro subscribers will get access "over the coming days."

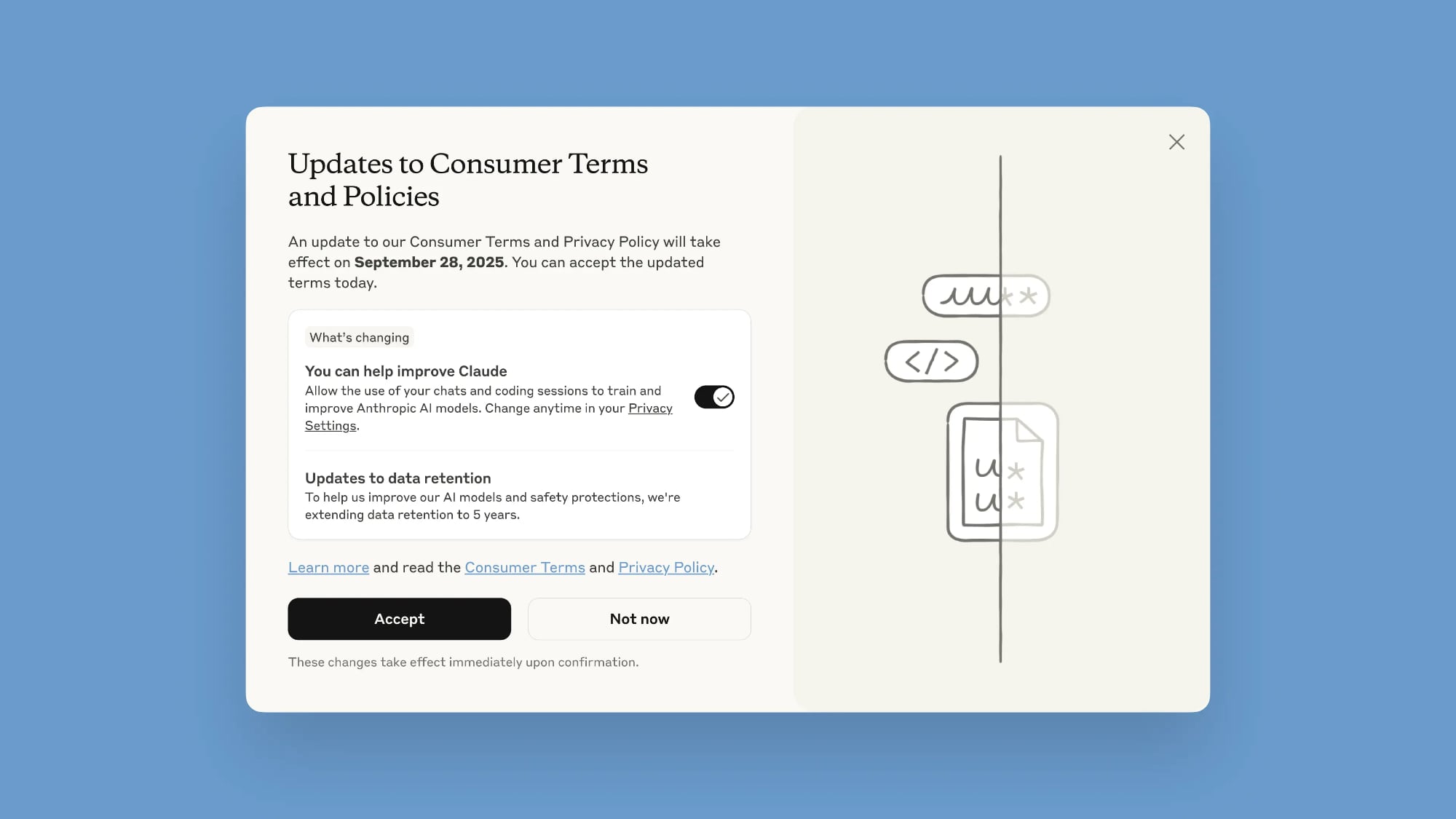

Memory is an opt-in feature that can be turned on in Claude's settings, which include options to "search and reference chats" and "generate memory from chat history." Claude also provides an editable memory summary that shows users what it remembers from their conversations.

In Claude's projects section, each project has its own separate memory. This division keeps different discussions distinct, so work and personal chats stay separate.

This article, "Anthropic Brings Automatic Memory to Claude Pro and Max Users" first appeared on MacRumors.com

